Hi,

I finally got some time to get calibration working in a simple way (I think), and with good results.

To perform the calibration, we capture images of one or more chessboards.

This can be done with a live camera feed, a video file or an image sequence.

The calibration progress and its results are shown to the user immediately.

We can evaluate the captures and decide if we want to keep or remove them from the calibration list.

Some chessboards may be incorrectly identified and must be removed from the calibration, but this has to be done manually.
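A common way to spot a badly identified chessboard is its reprojection error: project the board's corners back through the current calibration and measure how far they land from the detected corners. The Python/NumPy sketch below assumes both corner sets are already available for each capture; the function names and the 3× median threshold are hypothetical choices for illustration, not how PVS decides.

```python
import numpy as np


def per_view_rms(detected, reprojected):
    """RMS reprojection error in pixels for each captured chessboard.

    detected, reprojected: sequences of (N, 2) corner arrays, one pair per capture.
    """
    errors = []
    for det, rep in zip(detected, reprojected):
        diff = np.asarray(det, dtype=float) - np.asarray(rep, dtype=float)
        errors.append(float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1)))))
    return errors


def suspicious_views(errors, factor=3.0):
    """Indices of captures whose error exceeds `factor` times the median error."""
    median = float(np.median(errors))
    return [i for i, err in enumerate(errors) if err > factor * median]


# Three captures of a 4-corner board, the last one clearly off.
detected = [np.zeros((4, 2))] * 3
reprojected = [np.zeros((4, 2)) + [dx, 0.0] for dx in (0.1, 0.2, 2.0)]
errors = per_view_rms(detected, reprojected)
print(suspicious_views(errors))  # [2]
```

Flagged captures are only candidates; the final decision to drop them still belongs to the user.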

Each source is calibrated separately, and the stereo pair is calibrated at the end.
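Calibrating each source first and the stereo pair afterwards means the stereo step mainly has to find the pose of one camera relative to the other. As a minimal sketch (not PVS code): if both cameras see the same chessboard and each camera's board pose (R_i, t_i) is known, so that X_ci = R_i·X + t_i, then R = R_2·R_1ᵀ and T = t_2 - R·t_1. The helper `rot_z` is hypothetical and only builds test poses.

```python
import numpy as np


def relative_pose(R1, t1, R2, t2):
    """Pose of camera 2 relative to camera 1 from one shared chessboard view.

    (Ri, ti) map board coordinates into camera i: X_ci = Ri @ X + ti.
    Returns (R, T) such that X_c2 = R @ X_c1 + T.
    """
    R = R2 @ R1.T
    T = t2 - R @ t1
    return R, T


def rot_z(deg):
    """Rotation about the Z axis, used here only to build test poses."""
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a), np.cos(a), 0.0],
                     [0.0, 0.0, 1.0]])


R1, t1 = rot_z(10), np.array([0.10, 0.00, 1.00])
R2, t2 = rot_z(25), np.array([-0.05, 0.02, 1.10])
R, T = relative_pose(R1, t1, R2, t2)

corner = np.array([0.3, 0.2, 0.0])  # a board corner in board coordinates
in_cam1 = R1 @ corner + t1
in_cam2 = R2 @ corner + t2
print(np.allclose(R @ in_cam1 + T, in_cam2))  # True
```

With many views the per-view estimates disagree slightly, so a joint refinement over all views (for example OpenCV's `cv2.stereoCalibrate` with `cv2.CALIB_FIX_INTRINSIC`) is the usual next step.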

One of the new features is the possibility to use more than one chessboard in each image.

The limitation here is that all the chessboards must be the same size, with the same square size.
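With identical chessboards, a single grid of 3D corner coordinates can describe every board found in an image, and each detection can be passed to the calibration as its own view, since each board has its own pose. A minimal Python/NumPy sketch of that idea, assuming OpenCV-style row-by-row corner ordering on the Z = 0 plane; the function names are hypothetical, and the square size unit (mm here) simply sets the unit of the resulting translations.

```python
import numpy as np


def board_object_points(inner_cols, inner_rows, square_size):
    """3D corner coordinates of one planar chessboard on Z = 0, row by row."""
    xs, ys = np.meshgrid(np.arange(inner_cols), np.arange(inner_rows))
    pts = np.zeros((inner_rows * inner_cols, 3), dtype=np.float32)
    pts[:, 0] = xs.ravel() * square_size
    pts[:, 1] = ys.ravel() * square_size
    return pts


def object_points_for_image(boards_found, inner_cols, inner_rows, square_size):
    """One copy of the same grid per board detected in an image."""
    grid = board_object_points(inner_cols, inner_rows, square_size)
    return [grid.copy() for _ in range(boards_found)]


views = object_points_for_image(3, 9, 6, 25.0)  # 3 boards, 9x6 inner corners, 25 mm squares
print(len(views), views[0].shape)  # 3 (54, 3)
```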

On a good PC everything runs fast and smoothly. I'm concerned about slower ones: it will work, but it takes more time.

Here are some images of the progress.

Below is an image of the mono calibration.

We can now calibrate devices individually and in stereo.

The image shows, on the left, the camera capture with an overlay of the captured chessboards.

On the right is the list of captured chessboards (we can remove the bad ones if we wish).

In the middle is the undistorted result.
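The undistorted view comes from inverting the lens distortion model found by the calibration. The post doesn't say which model PVS uses; as an assumption, here is a Python/NumPy sketch of the widely used Brown-Conrady radial/tangential model (the one OpenCV uses with k1, k2, p1, p2, k3), working on normalized image coordinates (pixel minus principal point, divided by focal length). Undistortion has no closed form, so it is done by fixed-point iteration; the coefficients below are made up.

```python
import numpy as np


def _terms(xy, k1, k2, p1, p2, k3):
    """Radial factor and tangential offsets at normalized points xy."""
    x, y = xy[..., 0], xy[..., 1]
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return radial, dx, dy


def distort(xy, k1, k2, p1, p2, k3=0.0):
    """Apply radial + tangential distortion to ideal normalized points."""
    xy = np.asarray(xy, dtype=float)
    radial, dx, dy = _terms(xy, k1, k2, p1, p2, k3)
    return np.stack([xy[..., 0] * radial + dx, xy[..., 1] * radial + dy], axis=-1)


def undistort(xy_d, k1, k2, p1, p2, k3=0.0, iterations=20):
    """Recover ideal points from distorted ones by fixed-point iteration."""
    xy_d = np.asarray(xy_d, dtype=float)
    xy = xy_d.copy()  # initial guess: no distortion
    for _ in range(iterations):
        radial, dx, dy = _terms(xy, k1, k2, p1, p2, k3)
        xy = np.stack([(xy_d[..., 0] - dx) / radial,
                       (xy_d[..., 1] - dy) / radial], axis=-1)
    return xy


coeffs = dict(k1=-0.2, k2=0.05, p1=0.001, p2=-0.0005)
ideal = np.array([[0.1, 0.2], [-0.3, 0.1], [0.25, -0.25]])
print(np.allclose(undistort(distort(ideal, **coeffs), **coeffs), ideal, atol=1e-8))  # True
```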

I also added a way to capture chessboards from the screen.

Connect the PC to a TV or projector to get bigger calibration patterns and calibrate the cameras without having to print the chessboards.

This has the advantage of using an already planar surface.
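When the chessboard is shown on a screen, its physical square size comes from the screen's pixel pitch rather than from a ruler. A small Python sketch, assuming square pixels and the pattern displayed at the screen's native resolution with no scaling; the 55-inch 1920x1080 TV is only an example, and the helper names are hypothetical.

```python
import math

import numpy as np


def pixel_pitch_mm(diagonal_inches, width_px, height_px):
    """Physical size of one pixel, assuming square pixels."""
    return diagonal_inches * 25.4 / math.hypot(width_px, height_px)


def chessboard_image(squares_x, squares_y, square_px):
    """Black/white chessboard as an 8-bit image (top-left square is black)."""
    ys, xs = np.indices((squares_y * square_px, squares_x * square_px))
    return (((xs // square_px + ys // square_px) % 2) * 255).astype(np.uint8)


pitch = pixel_pitch_mm(55, 1920, 1080)
board = chessboard_image(10, 7, 120)  # 9x6 inner corners, fits in 1920x1080
print(f"pitch {pitch:.4f} mm, square {120 * pitch:.1f} mm")
# pitch 0.6342 mm, square 76.1 mm
```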

I also got two 360º lenses to test omnidirectional reconstruction.

The calibration seems to work well already.

Some news soon...

Edit:

Just to add a spoiler...

29 February 2016

28 January 2016

What's the progress in PVS2?

I got some requests for news, so here they are:

I moved away a bit from the path I was working on and decided to make my first WPF interface: better looking, more modern, and a chance for me to learn something new.

So now we have a WPF interface built in C# around a C++ DLL.

I used this chance to create my own borderless WPF windows (FlyPT Window), aiming for a design more to my liking and, hopefully, more professional.

I have also created a new library for video capture (FlyPT Video Capture), based on Microsoft Media Foundation, with the intention of better understanding the system and solving some problems with the libraries I was using.

I'm now able to capture from cameras/boards with more than one stream, since I can select which stream to capture.

I can also control all the camera parameters without errors (one of the problems I had).

I'm right now dedicating more time to calibration, trying to achieve a better, faster and more reliable result.

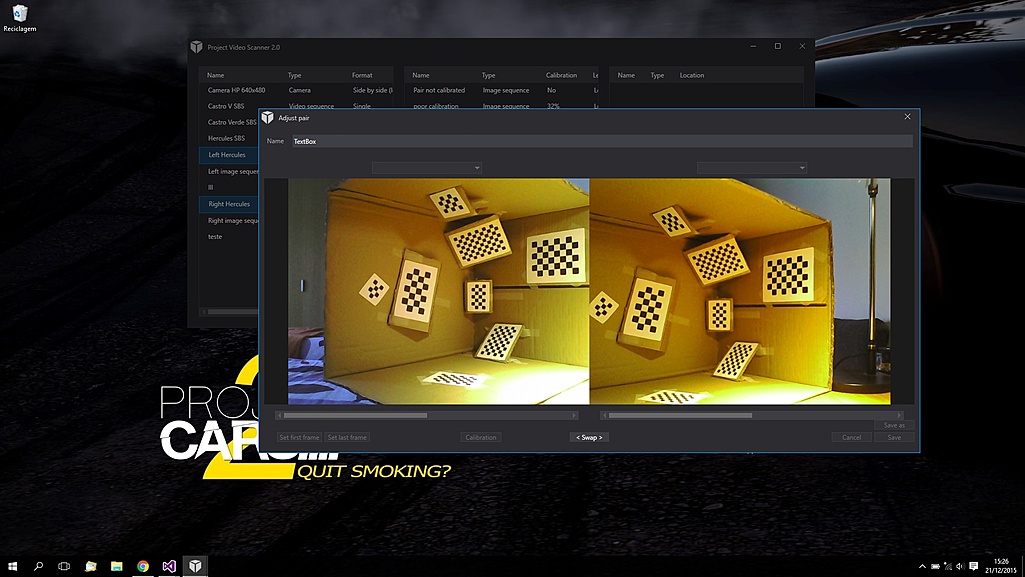

To test my new calibration system I made a small test rig.

It's a cardboard box with multiple chequerboards, all of them with different sizes so they can be distinguished.

The idea behind this method is to calibrate the cameras with one shot:

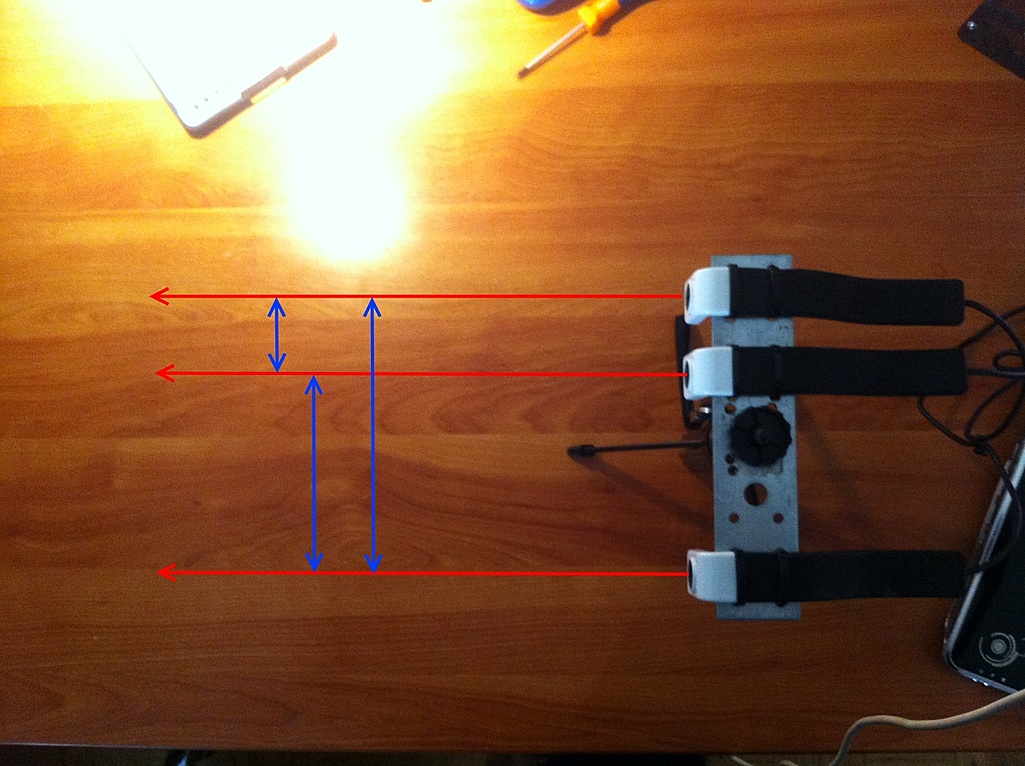
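For a one-shot rig, each detected chessboard has to be matched to the physical board it came from. Assuming the boards are told apart by their inner-corner counts (the post only says they have different sizes), the lookup can be as simple as this Python sketch; the board names and sizes are hypothetical, and a board detected twice is dropped as ambiguous.

```python
# Hypothetical rig: inner-corner counts (columns, rows) -> board name.
BOARDS = {
    (9, 6): "left wall",
    (7, 5): "floor",
    (5, 4): "right wall",
}


def identify(detections):
    """Map board name -> detection index for the pattern sizes found in one image.

    Unknown sizes are ignored; a board detected more than once is discarded,
    since we cannot tell which detection is the real one.
    """
    found = {}
    ambiguous = set()
    for index, size in enumerate(detections):
        name = BOARDS.get(tuple(size))
        if name is None:
            continue
        if name in found:
            ambiguous.add(name)
        found[name] = index
    return {name: i for name, i in found.items() if name not in ambiguous}


print(identify([(9, 6), (5, 4), (8, 8)]))  # {'left wall': 0, 'right wall': 1}
```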

To test the calibration I also built a new camera rig:

It now has 3 possible ranges, as a way to improve the camera dislocation calculation and get a better range in the reconstruction.
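Why several ranges help can be seen from the standard rectified stereo relation Z = f·B/d (depth Z, focal length f in pixels, baseline B, disparity d). Assuming the "ranges" are different baselines between the two cameras, a disparity error δd gives a depth error of roughly Z²·δd/(f·B), so a wider baseline keeps distant points usable. The numbers below (f = 1000 px, half-pixel disparity error) are illustrative, not measurements from this rig.

```python
def depth_from_disparity(f_px, baseline_m, disparity_px):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    return f_px * baseline_m / disparity_px


def depth_error(f_px, baseline_m, depth_m, disparity_error_px=0.5):
    """Approximate depth uncertainty: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (f_px * baseline_m)


for baseline in (0.1, 0.2, 0.3):
    err = depth_error(1000, baseline, 20.0)
    print(f"B = {baseline} m -> about {err:.2f} m of depth error at 20 m")
```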

Besides all this, I'm working on the depth maps, testing some CUDA-accelerated solutions.

That's it for now.

24 July 2015

PVS 2.0

OK, it's time to show the new features of PVS 2.0.

All this is made as a leisure project, so don't expect it to appear overnight. It will take time.

The new version comes after some feedback I got from users, and some features they suggested.

Other features were already on the list for upgrades.

So what's to come:

-New interface with new logic, taking data reusability into account.

If you make a calibration for a rig, we should be able to use that calibration each time we use that rig.

-Use different lens types.

In the current PVS we can't use fisheye lenses.

With this feature we will be able to use GoPro cameras.

I'm also working on single-camera rigs with mirrors for stereo, and on homemade omnidirectional lenses (360º).

Keep an eye open for some DIY.

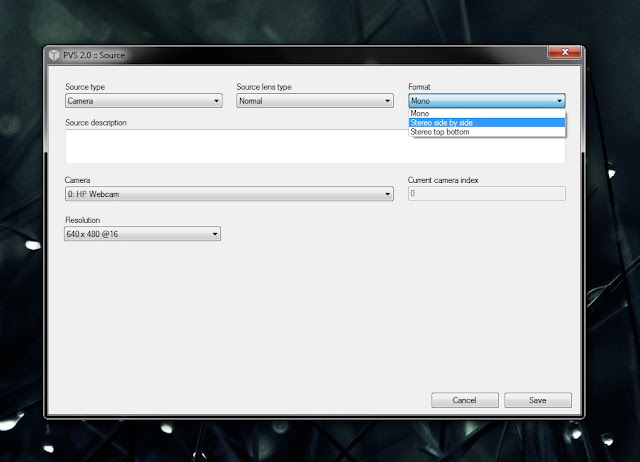

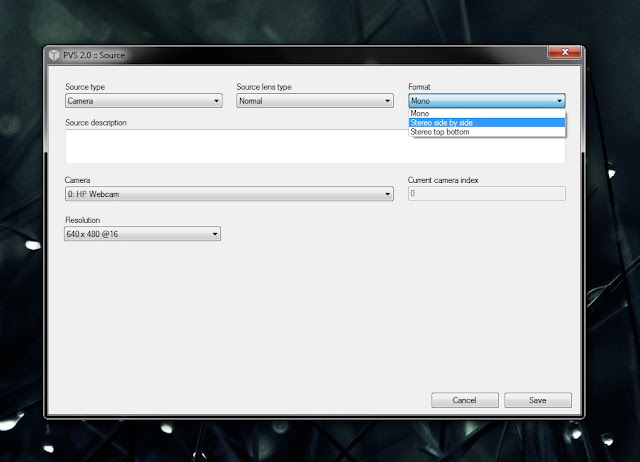

-Use multiple types of sources.

Use videos, sequences of still pictures and live camera feeds as sources (in real time, if processing power allows).

-Allow the use of more codecs.

-Use multiple cameras.

Instead of using a single stereo pair, allow more cameras to be joined to enlarge the reconstruction area and improve camera dislocation precision (one of the big problems right now).

-Introduce camera dislocation correction with GPS data obtained from phones or other hardware.

The GPS information should be entered during capture and associated with a specific frame, or entered later in the updated correction module.

With this we should be able to correctly orient the point cloud and correct drift errors.

-Modularity and plugin usability.

In the current version, modules were already independent and easily replaced. For 2.0 I want to be able to add new algorithms easily.

If we get a better algorithm for camera tracking, we should be able to add it to the program as a plugin and have it available as an option.

-Modularity should take multiprocessing into account, allowing multiple modules to run simultaneously and multiple times.

-As in the current PVS, we should be able to stop processing at any time without losing the work done up to that moment.

This makes bugs and crashes more bearable.

-Maybe add more point cloud post-processing.

For example, subsampling, mesh creation and texture extraction.

-Enhance all algorithms for speed and better results.

Especially camera dislocation tracking. I would like to use this with a handheld rig, allowing less subtle movements and better tracking around corners.
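On the lens types item above: a pinhole model places a ray at angle θ from the optical axis at image radius r = f·tan θ, which blows up as θ approaches 90°, so it cannot describe wide fisheye lenses such as the GoPro's. Fisheye models instead use something close to r = f·θ. As an illustration only (the post does not say which model PVS 2.0 will use), here is a Python sketch of the equidistant model with the polynomial correction θ_d = θ(1 + k1θ² + k2θ⁴ + k3θ⁶ + k4θ⁸) used by OpenCV's `cv2.fisheye` module. The 300 px focal length is made up.

```python
import math


def pinhole_radius(f_px, theta):
    """Image radius (px) of a ray at angle theta (rad) for a pinhole camera."""
    return f_px * math.tan(theta)


def fisheye_radius(f_px, theta, k=(0.0, 0.0, 0.0, 0.0)):
    """Equidistant fisheye with polynomial distortion in theta."""
    k1, k2, k3, k4 = k
    t2 = theta * theta
    theta_d = theta * (1 + k1 * t2 + k2 * t2 ** 2 + k3 * t2 ** 3 + k4 * t2 ** 4)
    return f_px * theta_d


for deg in (30, 60, 80, 89):
    theta = math.radians(deg)
    print(f"{deg:2d} deg: pinhole {pinhole_radius(300, theta):8.1f} px, "
          f"fisheye {fisheye_radius(300, theta):6.1f} px")
```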

So it's a big list!

I already started work, so keep an eye open for news.

I will try to post the developments.
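For the GPS correction item in the list above, a usual first step is to turn latitude/longitude into local metres so the GPS track can be compared with the reconstructed camera path. A Python sketch of the standard WGS84 geodetic to ECEF to local East-North-Up conversion, relative to a reference fix; this illustrates the conversion only, not the planned PVS module, and fitting the camera track to these points is left out. The coordinates are example values.

```python
import math

# WGS84 ellipsoid constants
A = 6378137.0                 # semi-major axis (m)
F = 1 / 298.257223563         # flattening
E2 = F * (2 - F)              # first eccentricity squared


def to_ecef(lat_deg, lon_deg, h):
    """Geodetic coordinates (degrees, metres) to Earth-centred XYZ (metres)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = A / math.sqrt(1 - E2 * math.sin(lat) ** 2)
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - E2) + h) * math.sin(lat)
    return x, y, z


def to_enu(lat_deg, lon_deg, h, ref_lat_deg, ref_lon_deg, ref_h):
    """East/North/Up offset (metres) of a GPS fix from a reference fix."""
    x, y, z = to_ecef(lat_deg, lon_deg, h)
    x0, y0, z0 = to_ecef(ref_lat_deg, ref_lon_deg, ref_h)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat0, lon0 = math.radians(ref_lat_deg), math.radians(ref_lon_deg)
    east = -math.sin(lon0) * dx + math.cos(lon0) * dy
    north = (-math.sin(lat0) * math.cos(lon0) * dx
             - math.sin(lat0) * math.sin(lon0) * dy
             + math.cos(lat0) * dz)
    up = (math.cos(lat0) * math.cos(lon0) * dx
          + math.cos(lat0) * math.sin(lon0) * dy
          + math.sin(lat0) * dz)
    return east, north, up


# 0.001 degrees north of a reference point at 37.7 N: roughly 111 m north.
print(to_enu(37.701, -8.08, 200.0, 37.7, -8.08, 200.0))
```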

20 July 2015

New demo video

A new video, filmed in Castro Verde (Portugal), is now available.

There are no cuts.

The idea is to get a direct experience of the problems we might have with the reconstructions.

Download the video in two sections at:

Castro Verde 1 (1.9 GB)

Castro Verde 2 (1.26 GB)

In PVS, on the reconstruction tab, select that video for reconstruction.

Play with the settings.

The video was captured at various speeds, mainly at 30 km/h, but with moments at 0 and 50 km/h.
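The speed changes matter because they change how far the camera moves between consecutive frames. The frame rate of this video isn't stated; assuming 30 fps purely for illustration, a quick Python calculation:

```python
def metres_per_frame(speed_kmh, fps):
    """Distance travelled between consecutive frames (km/h -> m/s -> m/frame)."""
    return speed_kmh / 3.6 / fps


for speed in (0, 30, 50):
    print(f"{speed} km/h -> {metres_per_frame(speed, 30):.3f} m per frame")
```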

Bye!

PS: Wait for news! PVS 2.0 is in the works!

2 April 2014

From point cloud to mesh

Hi,

Now that we have point clouds, what can we do with them?

Well, my first idea was to use them as a reference for creating meshes in a 3D editor.

But if you want to make a mesh from the point cloud itself, how can we do it?

This is a basic tutorial to create meshes from point clouds in Cloud Compare.

For that, we need to install Cloud Compare.

Just go to: http://www.danielgm.net/cc/ and download the program.

It's free, but you can make a donation to the creator; he really deserves it.

PVS creates point clouds and the ones from the sample project are stored in the folder ".../PVS/Sample Project/Reconstruction/Absolute". They are in the ASCII PLY format.

If you are interested in knowing more about the format, please visit http://paulbourke.net/dataformats/ply/ .
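For reference, an ASCII PLY point cloud is a short text header followed by one line per vertex. The exact properties PVS writes aren't listed here, so this Python sketch assumes float x/y/z plus optional uchar red/green/blue; the function names are hypothetical.

```python
def ply_text(points, colors=None):
    """Build an ASCII PLY document for (x, y, z) points with optional (r, g, b) colours."""
    lines = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
    ]
    if colors is not None:
        lines += ["property uchar red", "property uchar green", "property uchar blue"]
    lines.append("end_header")
    for i, (x, y, z) in enumerate(points):
        row = f"{x} {y} {z}"
        if colors is not None:
            r, g, b = colors[i]
            row += f" {int(r)} {int(g)} {int(b)}"
        lines.append(row)
    return "\n".join(lines) + "\n"


def write_ply(path, points, colors=None):
    """Save the document produced by ply_text to a file."""
    with open(path, "w", encoding="ascii") as fh:
        fh.write(ply_text(points, colors))


print(ply_text([(0.0, 1.5, -2.0)], [(255, 128, 0)]), end="")
```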

OK, now let's start:

1 - Open Cloud Compare and drag in the point clouds you want to process.

2 - Cloud Compare asks some questions for each point cloud; just click "Ok" for all of them. This may take a while.

If you are aware of a faster way, please share with me.

Once loaded, all the point clouds appear in the viewer.

Usually I change from orthographic projection to object-centred projection. To do that, use the projection button on the left pane and switch it from orthographic to object-centred.

3 - The point clouds are separated, let's merge them.

Hold the "Alt" key and drag with the mouse to select all the points in the viewer, or select all the point clouds in the list on the left.

Now go to the menu and select "Edit->Merge".

4 - Now we have a merged point cloud.

We need to subsample the point cloud to remove overlapping points and get a workable mesh.

Once again, select the point cloud you want to subsample.

Next, go to the menu and select "Edit->Subsample".

- For the method, I use "Space".

- I'm using 0.05 meters for the space between points.

- Click "Ok" and wait.

We now have two point clouds: the original one (now hidden) and the subsampled one.
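The "Space" method keeps points so that no two of them are closer than the chosen distance. Cloud Compare does this with its own octree-based code; as a rough illustration of the idea only, here is a greedy Python/NumPy version that hashes points into a grid whose cells are the size of the minimum distance, so each new point only has to be compared with kept points in the 27 surrounding cells. The function names are hypothetical.

```python
from collections import defaultdict

import numpy as np


def _near_kept(grid, key, p, min_dist_sq):
    """True if a kept point closer than min_dist lies in the 3x3x3 neighbouring cells."""
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for dz in (-1, 0, 1):
                for q in grid.get((key[0] + dx, key[1] + dy, key[2] + dz), ()):
                    if np.sum((p - q) ** 2) < min_dist_sq:
                        return True
    return False


def subsample_min_distance(points, min_dist):
    """Greedily keep points so that no two kept points are closer than min_dist."""
    pts = np.asarray(points, dtype=float)
    grid = defaultdict(list)  # cell index -> kept points in that cell
    kept = []
    for i, p in enumerate(pts):
        key = tuple(int(c) for c in np.floor(p / min_dist))
        if not _near_kept(grid, key, p, min_dist * min_dist):
            grid[key].append(p)
            kept.append(i)
    return pts[kept]


rng = np.random.default_rng(0)
cloud = rng.random((2000, 3))  # 1 m cube of random points
sparse = subsample_min_distance(cloud, 0.05)
print(len(cloud), "->", len(sparse))
```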

5 - Now let's make the mesh.

You must have the subsampled point cloud selected.

On the menu, select "Edit->Mesh->Delaunay 2D (axis aligned plane)".

- Select a value of 0.8 meters for the maximum edge length.

- Press "Ok" and wait.

The mesh is created. Uncheck "Normals" to see the coloured faces and check "Wireframe" to see the wire frame.
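The maximum edge length option stops the 2D Delaunay triangulation from bridging gaps with long, thin triangles. A Python/NumPy sketch of such a filter, assuming the triangles (vertex index triples) already come from a triangulation of the points' X/Y coordinates and measuring edges in 3D; Cloud Compare's exact rule may differ.

```python
import numpy as np


def drop_long_triangles(points, triangles, max_edge):
    """Keep only triangles whose three edges are all <= max_edge."""
    pts = np.asarray(points, dtype=float)
    tri = np.asarray(triangles, dtype=int)
    a, b, c = pts[tri[:, 0]], pts[tri[:, 1]], pts[tri[:, 2]]
    longest = np.maximum.reduce([
        np.linalg.norm(a - b, axis=1),
        np.linalg.norm(b - c, axis=1),
        np.linalg.norm(c - a, axis=1),
    ])
    return tri[longest <= max_edge]


points = [[0, 0, 0], [0.5, 0, 0.1], [0, 0.5, 0], [5, 5, 0]]
triangles = [[0, 1, 2], [1, 2, 3]]
print(drop_long_triangles(points, triangles, 0.8).tolist())  # [[0, 1, 2]]
```

If SciPy is available, `scipy.spatial.Delaunay(np.asarray(points)[:, :2]).simplices` gives suitable triangles for the axis-aligned (X/Y) case.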

6 - OK, this is a bit chaotic; let's smooth the surfaces.

Select the mesh and, on the menu, go to "Edit->Mesh->Smooth (Laplacian)".

- Keep the default values.

- Click "Ok" and wait.

Once again, uncheck "Normals" to see the coloured faces and check "Wireframe" to see the wire frame.
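Laplacian smoothing moves every vertex a fraction of the way towards the average of its neighbours, repeated for a number of iterations, which flattens the spikes left by noisy points. A Python/NumPy sketch of that basic scheme; the iteration count and factor are illustrative and not necessarily Cloud Compare's defaults.

```python
import numpy as np


def laplacian_smooth(vertices, triangles, iterations=20, factor=0.2):
    """Move each vertex by `factor` towards the centroid of its neighbours."""
    v = np.asarray(vertices, dtype=float).copy()
    neighbours = [set() for _ in range(len(v))]
    for a, b, c in triangles:  # build vertex adjacency from the faces
        neighbours[a].update((b, c))
        neighbours[b].update((a, c))
        neighbours[c].update((a, b))
    for _ in range(iterations):
        new_v = v.copy()
        for i, nb in enumerate(neighbours):
            if nb:
                centroid = v[list(nb)].mean(axis=0)
                new_v[i] = v[i] + factor * (centroid - v[i])
        v = new_v
    return v


# A unit square with a spike in the middle, split into four triangles.
verts = [[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0], [0.5, 0.5, 1.0]]
tris = [[0, 1, 4], [1, 3, 4], [3, 2, 4], [2, 0, 4]]
smoothed = laplacian_smooth(verts, tris, iterations=1)
print(smoothed[4])  # the spike's z drops from 1.0 to 0.8 after one iteration
```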

That's it, you now have a smoothed mesh reconstruction.

Play with the values.
